Introduction

As tech startup founders, product managers, or product engineers, we often hear the same mantras: focus on your customers, understand your competition, and master every aspect of your product. If you are building a product on top of gen AI, you might have also heard about adding value with AI, not AI as value. What you don't often hear are honest case studies. At least we didn't when, one and a half years ago, after reading about the text-davinci-002 (GPT-3) model, we built an AI feature that evolved into a chat in a mobile app. This experience preceded entering the AI field at full speed, launching the humy.ai MVP, and raising a pre-seed round for it. Before humy, our team also built facing-it.com and hellohistory.ai and engaged in two AI consultancy projects. In total, we have served more than 200,000 customers. It's not a lot, but enough to share some of what we have learned.

During this journey we have followed some useful principles and made some mistakes. If you are curious what they are, welcome to the blog post; otherwise, feel free to re-share it.

Principles We Follow

1. Accept and communicate LLM limitations

The Honeycomb team's experience with LLMs was summarised by their PM in the blog post “All the Hard Stuff Nobody Talks About when Building Products with LLMs”:

The reality is that this tech is hard. LLMs aren’t magic, you won’t solve all the problems in the world using them, and the more you delay releasing your product to everyone, the further behind the curve you’ll be. There are no right answers here. Just a lot of work, users who will blow your mind with ways they break what you built, and a whole host of brand new problems that state-of-the-art machine learning gave you because you decided to use it.

My opinion is not different; however, I want to develop this idea slightly further. LLMs are not magic: they hallucinate, they are slow, they have cultural biases, and their context is limited. Accept these limitations, communicate their implications to your users, and you will be rewarded. Many LLM-based product ideas will only work in demos; some of them should not be launched into production. However, there is a fraction of good ideas on which you can build a very impactful product and a company. Our job as product builders is to find, shape, launch, and iterate these ideas.

Accepting limitations might mean involving a domain expert in product evolution or limiting the scope of the LLM-based feature. Two things we did were adding disclaimers and limiting the content in the app:

In December 2022, we launched Hello History, an app that lets you chat with historical figures. It gained traction. When we launched, we had competitors, most of them powered by the same LLM and offering similar functionality. Despite criticism for the inaccuracy of the text responses, Hello History was recognized as a teacher-friendly and safe product, partly because we did the following:

- When we launched, we had 3 disclaimers about inaccurate responses: on the website, during customer onboarding, and before each chat.

- We didn’t include controversial historical figures like Hitler or Stalin because we knew we couldn’t guarantee the quality of the responses.

- On top of that, we tried to provide educational value beyond the chat itself.

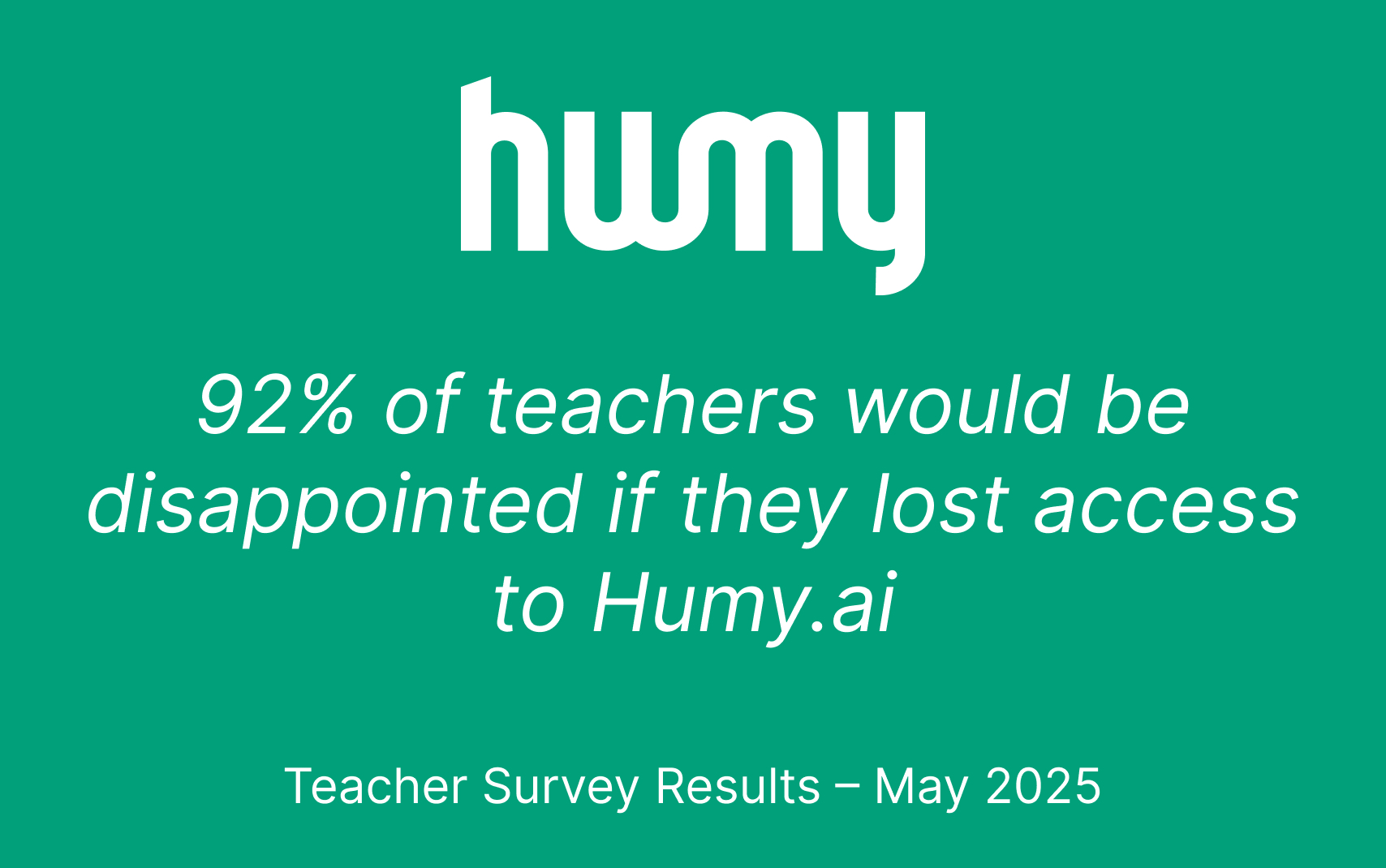

We follow this principle in humy.ai too. For instance, in the automated school assessments, we will present the score as “suggested” and the teacher will be able to correct it if they don’t agree with it. Also, we have a teacher in the core team who works closely with the product team.
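To make the "suggested score" idea concrete, here is a minimal sketch of how such a result could be modelled. The type and function names are hypothetical and are not taken from the humy.ai codebase; the only point is that the model's score and the teacher's correction are stored separately, and the teacher's value wins whenever it exists.

```typescript
// Hypothetical data model: names are illustrative, not humy.ai's real schema.
interface AssessmentResult {
  suggestedScore: number; // produced by the LLM
  teacherScore?: number;  // set only when the teacher corrects the suggestion
}

// The teacher's correction always takes precedence over the suggestion.
function finalScore(result: AssessmentResult): number {
  return result.teacherScore ?? result.suggestedScore;
}

// The UI label makes it explicit when a score is still only a suggestion.
function scoreLabel(result: AssessmentResult): string {
  return result.teacherScore === undefined
    ? `Suggested score: ${result.suggestedScore}`
    : `Score: ${result.teacherScore}`;
}

const untouched: AssessmentResult = { suggestedScore: 7 };
const corrected: AssessmentResult = { suggestedScore: 7, teacherScore: 9 };
console.log(scoreLabel(untouched), "|", scoreLabel(corrected));
```

Keeping both values also leaves a record of how often teachers disagree with the model, which can be useful feedback in its own right.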

In conclusion, I want to share a belief that communicating limitations is not only the right thing to do when working with emergent technology like LLMs but is also helpful in developing trust, which is very important for user retention.

2. Use API creatively

The Humy platform and the Hello History app enable chats with historical figures, and we use prompt engineering to achieve that. After migrating from text-davinci-002 to the ChatGPT API, we noticed that using the OpenAI system prompt to set the personality for the AI was not sufficient.

For instance, "You are Alan Turing" worked only until the customer asked the AI to pretend to be someone or something else. This issue still persists, even with the GPT-4 model. After some trial and error, we found a solution. Instead of using the chat API as intended, we discovered that sending the message history in one message and asking ChatGPT to extend the dialogue works quite well:

    {
      "model": "gpt-3.5-turbo",
      "messages": [
        {
          "role": "system",
          "content": "You are an AI that continues dialogs."
        },
        {
          "role": "user",
          "content": "What follows is a dialog between a student and Alan Turing.\n\nDialog\nTuring: How can I help you?\nStudent: What is your take on generative AI?\n\nWhat will be the response from Turing?\nTuring:"
        }
      ]
    }

The prompts we run in production are more complex than this, but we are still using this idea. In our experience, every third customer tries to break the personality of historical figures in the Hello History app or Humy, but nobody has reported that they have succeeded—perhaps you will be the first one?
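As an illustration of the idea, the request body above could be assembled from a chat history with a small helper like the one below. This is a sketch under assumptions: the `Turn` type, the `buildContinuationRequest` function, and its parameters are hypothetical names introduced here, and only the prompt layout follows the example above. It builds the payload and does not call any API.

```typescript
// Hypothetical helper: names and types are illustrative, not production code.
type Turn = { speaker: string; text: string };

interface ChatRequest {
  model: string;
  messages: { role: "system" | "user"; content: string }[];
}

// Packs the whole conversation into a single user message and asks the
// model to write the next line for the historical figure.
function buildContinuationRequest(
  figureName: string,  // e.g. "Alan Turing"
  figureLabel: string, // e.g. "Turing", the prefix used inside the dialog
  history: Turn[],
  model: string = "gpt-3.5-turbo",
): ChatRequest {
  const dialog = history.map((t) => `${t.speaker}: ${t.text}`).join("\n");
  const content = [
    `What follows is a dialog between a student and ${figureName}.`,
    "",
    "Dialog",
    dialog,
    "",
    `What will be the response from ${figureLabel}?`,
    `${figureLabel}:`,
  ].join("\n");

  return {
    model,
    messages: [
      { role: "system", content: "You are an AI that continues dialogs." },
      { role: "user", content },
    ],
  };
}

const request = buildContinuationRequest("Alan Turing", "Turing", [
  { speaker: "Turing", text: "How can I help you?" },
  { speaker: "Student", text: "What is your take on generative AI?" },
]);
console.log(JSON.stringify(request, null, 2));
```

Because the user's text only ever appears as one speaker's line inside a transcript, a message like "pretend to be someone else" reads as part of the dialog to be continued rather than as a new instruction.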

I am sure there will be other problems solvable by using the OpenAI API in a non-standard way.

3. Choose infrastructure wisely

OpenAI's API can experience fluctuations, as indicated on their status page, and you wouldn’t want your product experience to degrade when this happens.

Almost from the start, we decided to use the Azure OpenAI Service instead, available at https://azure.microsoft.com/en-us/products/ai-services/openai-service.

Pros of using Azure OpenAI are:

- Security: Azure OpenAI offers the security capabilities of Microsoft Azure while operating the same models as OpenAI.

- Scalability: You can deploy multiple instances of a model and handle more requests per second compared to the OpenAI API (unless you enter a special agreement, often not available to small startups). We have deployed two GPT-4 instances, which helped us manage traffic without backup strategies. Moreover, we can launch up to nine additional GPT-4 instances within minutes if needed.

- Azure Credits: If you are a VC/incubator-funded startup, you might qualify for up to $150,000 in Azure Credits from Microsoft, all of which can be allocated towards OpenAI model tokens.

- Reliability and Speed: Our experience suggests that Azure's service is faster, probably because you can deploy the models closer to the region of your users and don’t share them with other OpenAI users.
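With several deployments of the same model, requests have to be spread across them somehow. The sketch below shows one simple option, round-robin rotation. It is an assumption made for illustration: the article does not say how traffic is distributed, and the `DeploymentPool` class and the deployment names are hypothetical.

```typescript
// Hypothetical sketch: deployment names are placeholders, and round-robin is
// an assumed strategy, not necessarily what runs in production.
class DeploymentPool {
  private readonly deployments: string[];
  private cursor = 0;

  constructor(deployments: string[]) {
    if (deployments.length === 0) {
      throw new Error("At least one deployment is required");
    }
    this.deployments = [...deployments];
  }

  // Returns deployments in rotation so requests spread evenly across them.
  next(): string {
    const deployment = this.deployments[this.cursor];
    this.cursor = (this.cursor + 1) % this.deployments.length;
    return deployment;
  }
}

const pool = new DeploymentPool(["gpt4-instance-a", "gpt4-instance-b"]);
for (let i = 0; i < 4; i++) {
  console.log(`request ${i} -> ${pool.next()}`);
}
```

Adding another instance then only means adding one more name to the list passed to the pool.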

Further reading:

- Azure OpenAI FAQ: https://learn.microsoft.com/en-us/azure/ai-services/openai/faq

- Comparison of OpenAI and Azure OpenAI: https://drlee.io/openai-vs-azure-openai-a-deep-dive-into-their-differences-26f1107677e

Other tech decisions we made:

- We don't extensively use LangChain. While we use chunking algorithms, all prompt-related operations are managed manually for simplicity of code modification.

- Using TypeScript for frontend, backend, scripting, and data engineering. You can do a lot with a high-level programming language and even more when each team member can work with every component of the system.

- Keeping things simple, sometimes extremely simple. We believe that each architectural decision should enable fast iterations today and scale for the next six months. We don't use Kubernetes or micro-frontends because they would slow down product development and add maintenance cost at our scale. Our frontend is powered by Next.js, our backend is built on top of Azure Functions, and we use Auth0 for user management and auth.

Having a reliable infrastructure that enables fast iterations is crucial when working with emerging tech in startup mode.

4. Launch quickly and iterate

Our team has adopted an MVP mindset for launching new products or features. Even with a solid concept and consensus in the team, our priority is to validate it quickly. We typically don't commit to features requiring more than a month to develop, aiming to launch something new every two weeks.

This approach has long been advocated by startup and product leaders, as highlighted by Y Combinator. The AI field, where new products emerge daily, is no exception.

It’s important to balance this speed with our first principle of accepting and communicating limitations. Given AI's profound potential societal impact, especially in sensitive sectors like education, safety has to be considered with every launch. When a feature goes live, we actively seek feedback from educators (from the team or our power users) to gauge their reactions. This direct engagement allows us to refine and improve our product in subsequent versions, ensuring it meets the needs of both teachers and students.

5. Bring value with AI, not AI as value.

At Humy.ai, we prioritize conversations around the tangible benefits technology can bring — be it customer time saved, pain alleviated, or positive experiences created. Our most successful features have been the result of reflecting on past successes or talking directly with our users on a video call, rather than mere technological speculation behind closed doors.

For example, the development of our automated school assessments and tools for teachers was preceded by extensive discussions with educators. These conversations helped us understand the intricacies of school processes and evaluate the potential time savings our product could offer each teacher.

The feedback from investors reinforces our approach: the market is saturated with AI chatbots and AI chatbot builders. Some argue that developing AI for its own sake is a misallocation of time and resources—unless you can offer a solution that is significantly better than ChatGPT or others.

Mistakes We Made

1. Implementing RAG in-house is not trivial.

As with many other AI startups, we incorporated RAG (retrieval-augmented generation) to let our customers extend GPT inference with their own knowledge. Our implementation, designed to work with diverse inputs like PDFs, text files, web links, and plain text, initially seemed promising. However, real-world usage surfaced challenges that reflect how complex implementing RAG is. In fact, the problem is complex enough that other startups and companies are building features or products just to solve it (https://learn.microsoft.com/, https://carbon.ai/, https://nuclia.com/, and others).

Our initial plan was straightforward:

- The frontend collects inputs from users.

- These inputs are bundled into a multipart request and sent to our backend.

- The backend then chunks and uploads these pieces into our vector database.

- For user queries, the AI retrieves the three most relevant chunks from the database to enhance its responses.
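The chunking and retrieval steps of this plan can be sketched in a few lines. This is an illustration under assumptions, not the original implementation: it uses fixed-size character chunks with overlap, assumes embeddings are already computed elsewhere, and ranks chunks by cosine similarity in memory instead of querying a vector database. All names are hypothetical.

```typescript
// Hypothetical sketch of "chunk, then fetch the three most relevant chunks".
type Chunk = { text: string; embedding: number[] };

// Splits text into fixed-size character windows that overlap slightly,
// so a sentence cut at a boundary still appears whole in one chunk.
function chunkText(text: string, size: number, overlap: number): string[] {
  if (size <= 0 || overlap < 0 || overlap >= size) {
    throw new Error("Require size > 0 and 0 <= overlap < size");
  }
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += size - overlap) {
    chunks.push(text.slice(start, start + size));
    if (start + size >= text.length) break; // last window reached the end
  }
  return chunks;
}

// Cosine similarity between two vectors of equal length.
function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Returns the k chunks most similar to the query embedding (k = 3 by default).
function topK(query: number[], chunks: Chunk[], k: number = 3): Chunk[] {
  return [...chunks]
    .sort((x, y) => cosine(query, y.embedding) - cosine(query, x.embedding))
    .slice(0, k);
}

console.log(chunkText("The quick brown fox jumps over the lazy dog", 16, 4));
```

Even this toy version hints at the production issues listed next: everything runs synchronously on the whole document, and one chunking rule is applied to every input regardless of its format.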

This approach, however, struggled in production:

- Large documents introduced issues with timeouts and request size limits.

- The task of processing and chunking large documents is computationally intensive, particularly in JavaScript.

- The need for different parsing and chunking strategies for various document formats demanded either a very clever algorithm or clever filtering of user inputs. Building generic chunking is difficult because each file is different.

- The open-source tool we used for web link scraping is slow and doesn’t work for all links.

Recognizing the limitations of our initial approach, driven by a combination of our MVP mindset and the absence of experienced backend developers in our team, we are now discussing better strategies:

A. Adopting a third-party RAG provider: a third-party service could streamline the process, with a focus on asynchronous processing and implementing clever chunking mechanisms.

B. Re-implementation in-house: By narrowing the scope of accepted document formats and adopting an asynchronous processing model, we could better align our features with user needs and technical feasibility.
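Both options rely on asynchronous processing: the upload request only registers a job, and the heavy parsing and chunking happens later in a worker. The sketch below illustrates that shape with an in-memory queue. It is a simplification under assumptions: a real backend would use a durable queue and an asynchronous worker, the accepted formats are picked arbitrarily, and all names are hypothetical.

```typescript
// Hypothetical sketch of an asynchronous ingestion flow with a narrowed input scope.
type JobStatus = "queued" | "processing" | "done" | "failed";

interface IngestJob {
  id: number;
  fileName: string;
  status: JobStatus;
  error?: string;
}

// Assumed narrowed scope; the article does not say which formats would stay.
const ACCEPTED_EXTENSIONS = [".pdf", ".txt"];

class IngestQueue {
  private readonly jobs = new Map<number, IngestJob>();
  private readonly pending: number[] = [];
  private nextId = 1;

  // Called by the upload endpoint: validates, records the job, returns at once.
  enqueue(fileName: string): number {
    const lower = fileName.toLowerCase();
    if (!ACCEPTED_EXTENSIONS.some((ext) => lower.endsWith(ext))) {
      throw new Error(`Unsupported format: ${fileName}`);
    }
    const job: IngestJob = { id: this.nextId++, fileName, status: "queued" };
    this.jobs.set(job.id, job);
    this.pending.push(job.id);
    return job.id;
  }

  // Called by a background worker: handles one job outside the request cycle.
  processNext(handler: (fileName: string) => void): boolean {
    const id = this.pending.shift();
    if (id === undefined) return false;
    const job = this.jobs.get(id);
    if (job === undefined) return false;
    job.status = "processing";
    try {
      handler(job.fileName); // parsing, chunking, and upload would happen here
      job.status = "done";
    } catch (err) {
      job.status = "failed";
      job.error = err instanceof Error ? err.message : String(err);
    }
    return true;
  }

  // Polled by the frontend to show progress to the user.
  status(id: number): JobStatus | undefined {
    return this.jobs.get(id)?.status;
  }
}
```

The upload request no longer waits for chunking to finish, which addresses the timeout problem, and rejecting unsupported formats up front keeps the chunking logic small.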

If problems like this excite you, send us a message - we are hiring a backend developer.

2. Making GPT-3.5 reply in a specific format wasn’t always possible.

Attempting to coerce GPT-3.5 into generating responses in a specific format to trigger actions within our chat application proved to be unreliable. We tried to achieve this before the launch of function calling and the JSON response format. This experience underscored the limitations inherent to large language model (LLM) APIs and served as a valuable lesson in the importance of aligning technological capabilities with our product vision. In the end, we decided to change how the feature works. As with any technology, not everything is possible with LLMs.
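When a model is asked to answer in a fixed format, the safest assumption is that some replies will not follow it. The sketch below shows one defensive pattern: try to extract and validate a structured action, and fall back to treating the reply as plain text. The JSON shape and the `parseAction` name are assumptions made for this example; the article does not describe the actual format we tried.

```typescript
// Hypothetical action format: {"action": "...", "argument": "..."}.
type ChatAction = { action: string; argument: string };

// Returns a validated action, or null if the reply does not contain one.
function parseAction(reply: string): ChatAction | null {
  // Models often wrap the JSON in extra prose, so look for the outermost braces.
  const start = reply.indexOf("{");
  const end = reply.lastIndexOf("}");
  if (start === -1 || end <= start) return null;

  try {
    const parsed: unknown = JSON.parse(reply.slice(start, end + 1));
    if (typeof parsed === "object" && parsed !== null) {
      const record = parsed as Record<string, unknown>;
      if (typeof record.action === "string" && typeof record.argument === "string") {
        return { action: record.action, argument: record.argument };
      }
    }
  } catch {
    // Malformed JSON: fall through and treat the reply as plain text.
  }
  return null;
}

const replies = [
  'Sure! {"action": "show_map", "argument": "Rome"}',
  "Rome was founded, according to legend, in 753 BC.",
];
for (const reply of replies) {
  const action = parseAction(reply);
  console.log(action ? `trigger ${action.action}(${action.argument})` : `show text: ${reply}`);
}
```

A parser like this keeps the app from crashing on bad output, but it cannot make the model follow the format, so a feature that depends on the action firing every time remains unreliable.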

3. Recursive summarization loses too much information.

To overcome the context limitations of GPT-3 and enable a form of long-term memory within one of our LLM products, we implemented recursive summarization. It worked by adding a summary of the earlier conversation before the messages in the chat history prompt.

This approach led to significant information loss and corruption as messages were added. The AI would summarize the conversation and rely on this condensed version for future interactions, which, as expected, resulted in reduced accuracy and more hallucinations with each summarization cycle. The lesson: we should not rely on methods that work in demos without good evidence that they work in live products.
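The mechanism, and why it degrades, can be seen in a small sketch. The `RollingMemory` class below is a hypothetical reconstruction, not our production code: it keeps the last few messages verbatim and folds older ones into a summary that is itself re-summarized on every cycle. The summarizer is injected as a function; here a toy one that truncates text stands in for the LLM call.

```typescript
// Hypothetical sketch of recursive summarization with an injected summarizer.
type Summarize = (text: string) => string;

class RollingMemory {
  private summary = "";
  private readonly recent: string[] = [];
  private readonly summarize: Summarize;
  private readonly maxRecent: number;

  constructor(summarize: Summarize, maxRecent: number) {
    this.summarize = summarize;
    this.maxRecent = maxRecent;
  }

  // Keeps the newest messages verbatim; older ones are merged into the
  // existing summary, which is then summarized again (the recursive step).
  add(message: string): void {
    this.recent.push(message);
    if (this.recent.length > this.maxRecent) {
      const overflow = this.recent.splice(0, this.recent.length - this.maxRecent);
      const input = [this.summary, ...overflow].filter((s) => s.length > 0).join("\n");
      this.summary = this.summarize(input);
    }
  }

  // The prompt is the summary followed by the verbatim recent messages.
  prompt(): string {
    const parts: string[] = this.summary ? [`Summary so far: ${this.summary}`] : [];
    return [...parts, ...this.recent].join("\n");
  }
}

// Toy stand-in for an LLM summarizer: keeps only the first 40 characters.
const memory = new RollingMemory((text) => text.slice(0, 40), 2);
for (const message of [
  "Student: My name is Ada and I study in Lund.",
  "Turing: Nice to meet you, Ada.",
  "Student: What is a Turing machine?",
  "Turing: An abstract model of computation.",
]) {
  memory.add(message);
}
console.log(memory.prompt());
```

Every detail that the summarizer drops is gone for all later turns, and each cycle summarizes an already lossy summary. A real LLM summarizer is less crude than truncation, but the compounding effect is the same.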

4. Not focusing on our customers enough

After getting traction with the text chat interface, we tried to bring real-time head animation to our products. The idea made sense conceptually, and we had a developer who had built prototypes in the field of video generation. The plan was clear: merge an open-source talking head model, a 3D engine, a lipsync model, and a TTS API; run a GPU cluster with auto-scaling and WebRTC; and add load balancing and a web admin app for customer onboarding. In two months we built a prototype that looked poor (lipsync was a problem) and wasn’t commercially sustainable. Then we noticed that other teams were trying to do something similar, but even the big players like Nvidia were struggling to launch a commercial product with the characteristics we envisioned. We dropped this idea and accepted that spending this time doing more customer interviews, designing, coding, and validating features with the text interface would have been a better use of time.

We learned that the AI field is full of appealing problems, but solutions for them can be very difficult, and even if you know how to solve some of them, it doesn't mean you need to do it in-house.

5. Gen AI is a race

Little did we know about the AI startup field when we added our first features powered by GPT-3. Like many other startups, we initially found ourselves competing with other startups. But then OpenAI entered the race by introducing the ChatGPT app, the Assistants API, function calling, enterprise plans, and a marketplace. OpenAI has its own competitors like Google and Anthropic. Microsoft is building products on top of OpenAI models too. Also, the open-source community is very active and other big tech companies have begun to catch up. The field is very much alive. Everyone is either thinking about or building gen AI. Using GPT-3 wasn't a mistake, but not seeing how big gen AI would be certainly was. After being in the field for more than 1.5 years, we had to internalize adaptability and acceptance of change and think about a product strategy that expects frequent changes in models and infrastructure.

Conclusion

The conclusion was written by GPT-4 as a response to the article.

Embrace flexibility and be open to change. The AI landscape is rapidly evolving, and success often comes to those who are willing to adapt their vision based on new insights, user feedback, and technological advancements. Stay curious, be ready to pivot when necessary, and never lose sight of the value you aim to deliver to your users. This mindset will not only help you navigate the complexities of building an AI-driven startup but also enable you to innovate and lead in ways that truly make a difference.

-

Stas Shakirov

Co-founder & CTO
